StyleGAN-Canvas: An augmented encoder for human-AI co-creation

Type

Generative Deep Learning Framework

Materials

PyTorch, CUDA toolkit, Generative Adversarial Networks

Display

Presented at the 4th HAI-GEN Workshop at the ACM Intelligent User Interfaces Workshops (ACM IUI 2023), March 2023, Sydney, Australia.

Mar, 2023

Abstract

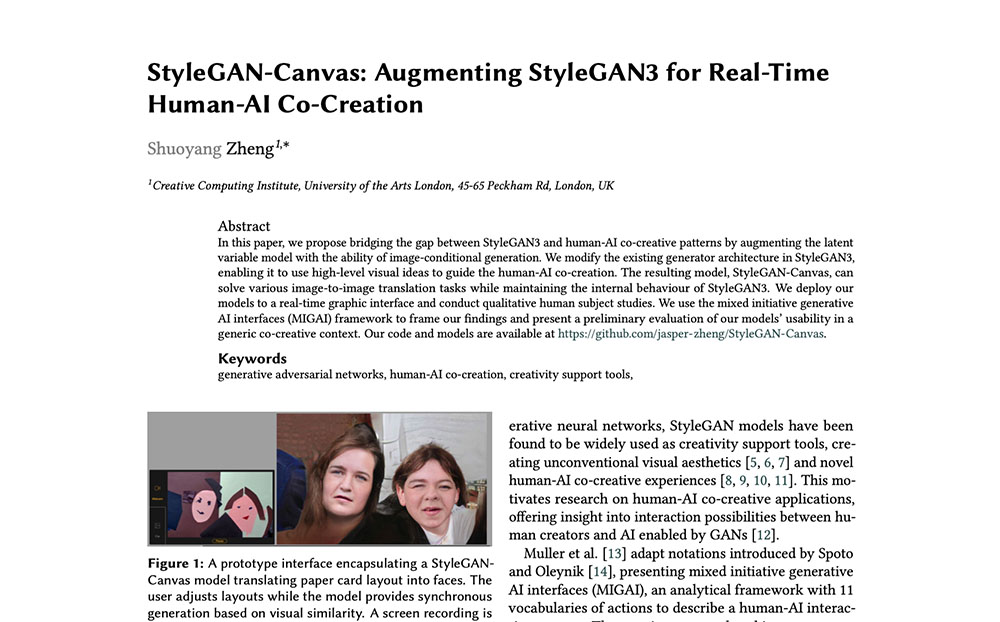

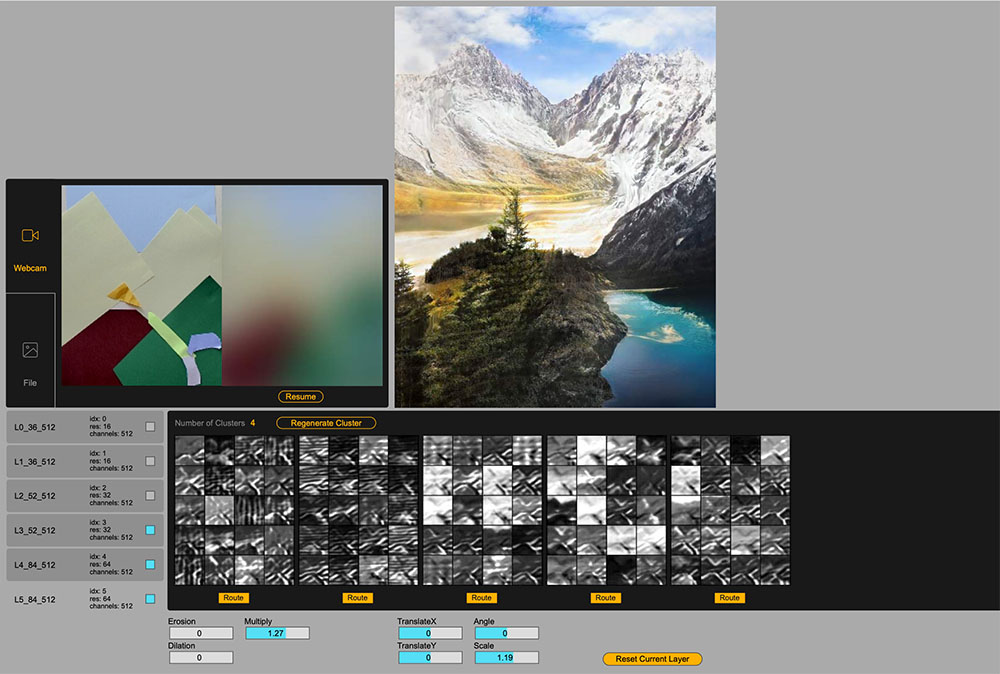

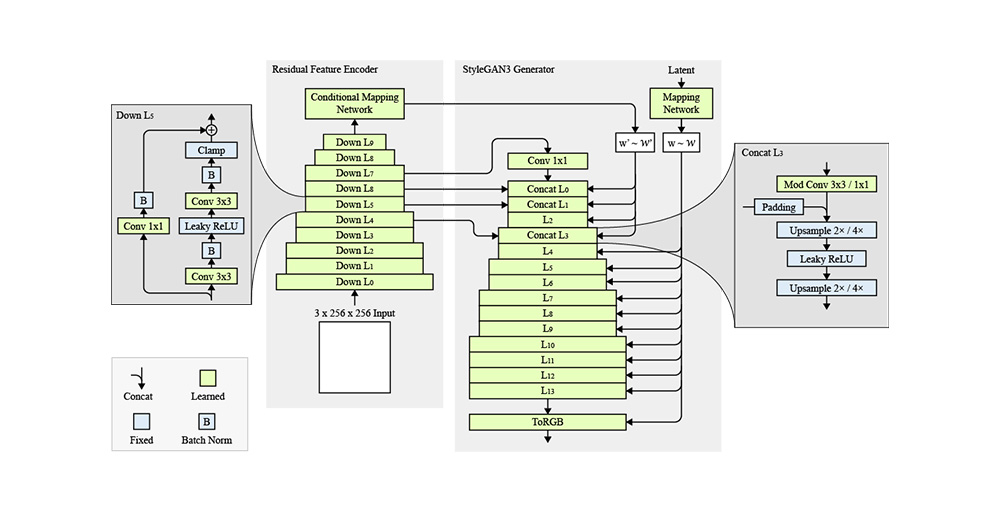

In this paper, we propose bridging the gap between StyleGAN3 and human-AI co-creative patterns by augmenting the latent variable model with image-conditional generation. We modify the existing StyleGAN3 generator architecture so that high-level visual ideas can guide human-AI co-creation. The resulting model, StyleGAN-Canvas, can solve various image-to-image translation tasks while maintaining the internal behaviour of StyleGAN3. We deploy our models to a real-time graphic interface and conduct qualitative human subject studies. We use the mixed-initiative generative AI interfaces (MIGAI) framework to frame our findings and present a preliminary evaluation of our models’ usability in a generic co-creative context.