My work explores the development of AI systems through their convergence with media and arts practices, with regard to the philosophical, ethical, and aesthetic implications they inherit.

Latent Terrain Synthesis: Walking in AI audio latent spaces

A coordinates-to-latents mapping model for neural audio autoencoders that can be used to build a steep, mountainous surface map for navigating an autoencoder's latent space.

Category: Research | Subject: Creative AI | Date: Jun, 2025

Soundwalking in Audio Latent Space

An algorithmic strategy for meaningfully unfolding a neural synth model's latent space into a two-dimensional plane. The unfolded structure is a streamable "latent terrain"; by tracing a linear path through it, one can create sound pieces with greater spectral complexity.

Category: Research | Subject: Creative AI | Date: Oct, 2024

Sketch-to-Sound Mapping with Interactive Machine Learning

Exploring interactive (personalised) constructions of mappings between visual sketches and musical controls. A prototype that leverages unsupervised feature learning to generate latent mappings using interactive machine learning.

Category: Research | Subject: Creative AI | Date: Feb, 2024

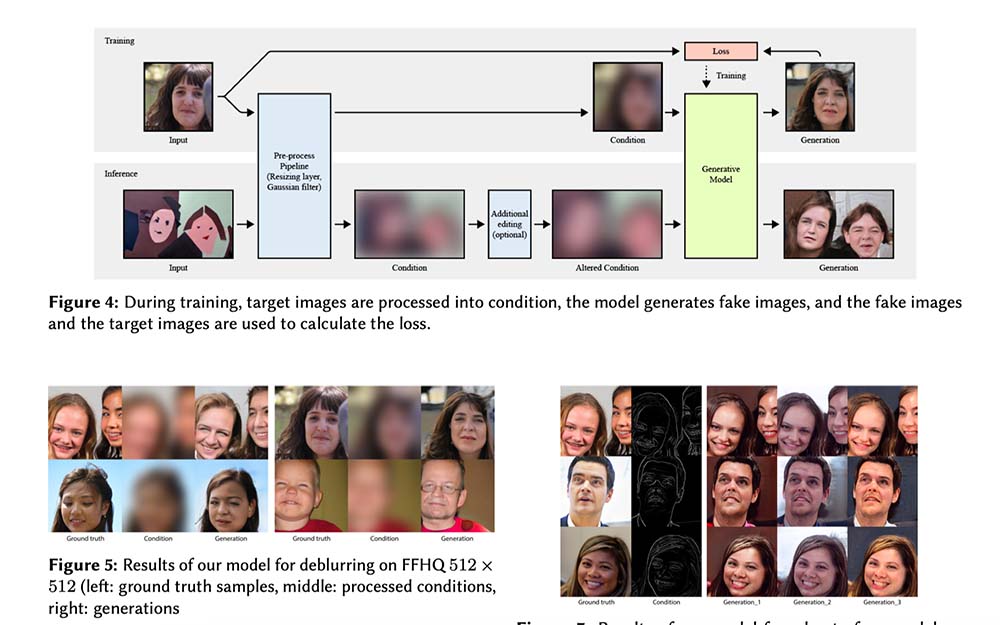

StyleGAN-Canvas: An augmented encoder for human-AI co-creation

We propose bridging the gap between StyleGAN3 and human-AI co-creative patterns by augmenting the latent variable model with the ability of image-conditional generation. The resulting model, StyleGAN-Canvas, solves various image-to-image translation tasks while maintaining the internal behaviour of StyleGAN3.

Category: Research | Subject: Creative AI | Date: Mar, 2023